Spark Server Configuration

A dedicated DGX Spark server was prepared to support AI and machine learning workloads.

Hardware resources verification:

NVIDIA GPU drivers (version 580) were installed and validated using nvidia-smi.

Output from nvidia-smi:

Additional required dependencies were verified and installed to ensure compatibility with Spark and AI workloads.
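The driver validation described above could be scripted roughly as follows. This is a minimal sketch, not the procedure actually used on the server: the function names and the `EXPECTED_MAJOR` variable are illustrative assumptions, and only the major version (580) comes from the text above.

```shell
#!/usr/bin/env bash
# Sketch: confirm that the installed NVIDIA driver matches an expected major version.
# EXPECTED_MAJOR, driver_major, and check_driver are hypothetical names for illustration.
EXPECTED_MAJOR="${EXPECTED_MAJOR:-580}"

# Extract the major component from a dotted version string (everything before the first dot).
driver_major() {
  printf '%s\n' "${1%%.*}"
}

# Query the driver version through nvidia-smi and compare its major component.
check_driver() {
  if ! command -v nvidia-smi >/dev/null 2>&1; then
    echo "nvidia-smi not found; is the driver installed?" >&2
    return 1
  fi
  local version
  version="$(nvidia-smi --query-gpu=driver_version --format=csv,noheader | head -n 1)"
  if [ "$(driver_major "$version")" = "$EXPECTED_MAJOR" ]; then
    echo "Driver $version matches expected major version $EXPECTED_MAJOR"
  else
    echo "Driver $version does not match expected major version $EXPECTED_MAJOR" >&2
    return 1
  fi
}
```

After sourcing the script, calling `check_driver` returns a non-zero status when `nvidia-smi` is missing or the major version differs, which makes it usable as a gate in a provisioning script.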

Model Provisioning

The Hugging Face CLI was installed to allow manual model downloads.

curl -LsSf https://hf.co/cli/install.sh | bash
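Once the CLI is installed, a manual download might look like the sketch below. The `MODEL_ROOT` directory, the helper function names, and the directory naming scheme are assumptions made for illustration; the report does not state which repositories were downloaded or where they were stored.

```shell
#!/usr/bin/env bash
# Sketch: download a model snapshot with the Hugging Face CLI (`hf download`).
# MODEL_ROOT, local_dir_for, and download_model are hypothetical names for illustration.
MODEL_ROOT="${MODEL_ROOT:-./models}"

# Map a repository ID of the form "org/name" to a directory under MODEL_ROOT,
# replacing each "/" with "__" so the result is a single path component.
local_dir_for() {
  printf '%s/%s\n' "$MODEL_ROOT" "${1//\//__}"
}

# Download the given repository into its local directory.
download_model() {
  local repo_id="$1"
  if ! command -v hf >/dev/null 2>&1; then
    echo "hf CLI not found; install it first" >&2
    return 1
  fi
  hf download "$repo_id" --local-dir "$(local_dir_for "$repo_id")"
}
```

For example, `download_model facebook/opt-125m` would place the files under `./models/facebook__opt-125m`. That repository ID is only a placeholder and is not necessarily the OPT variant deployed here.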

Models were deployed using Docker containers:

- OPT model

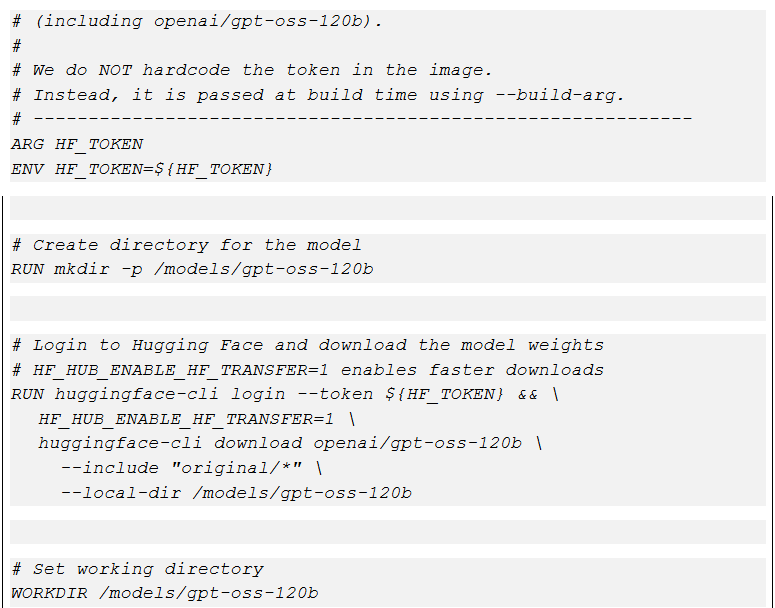

Dockerfile:

- Embedding (EBM) model

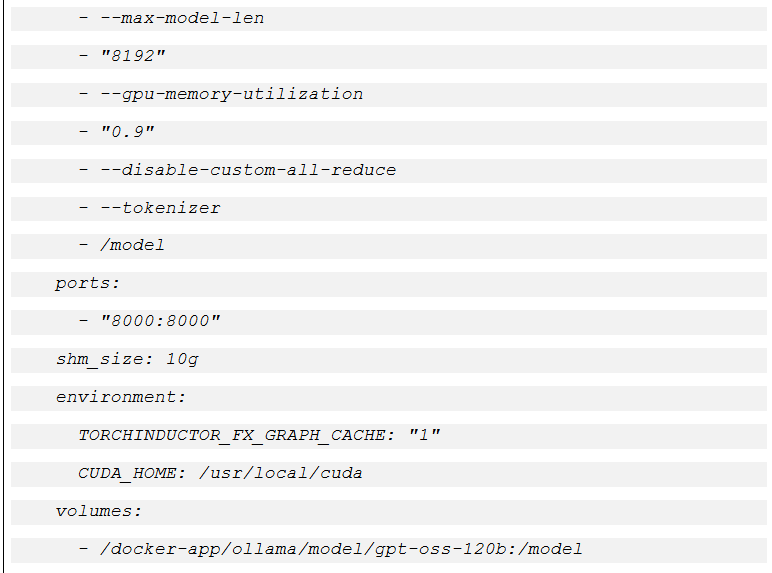

Dockerfile:

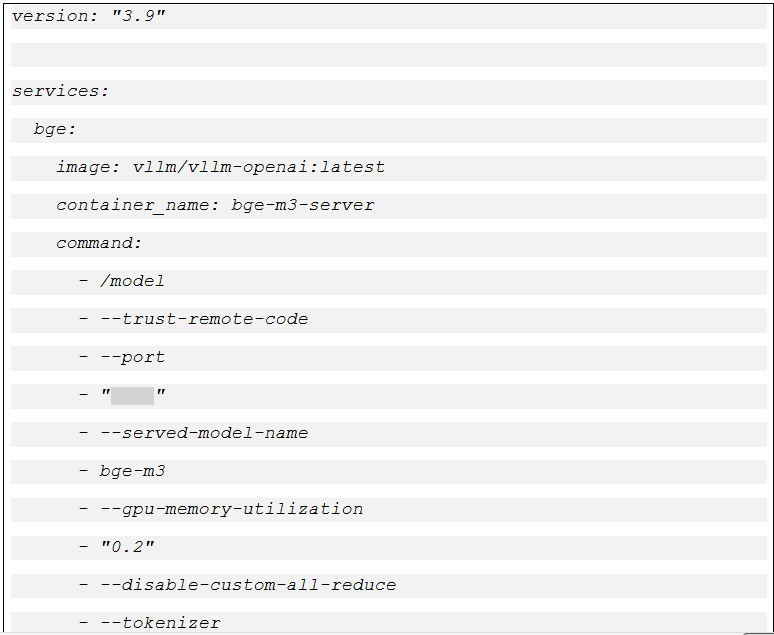

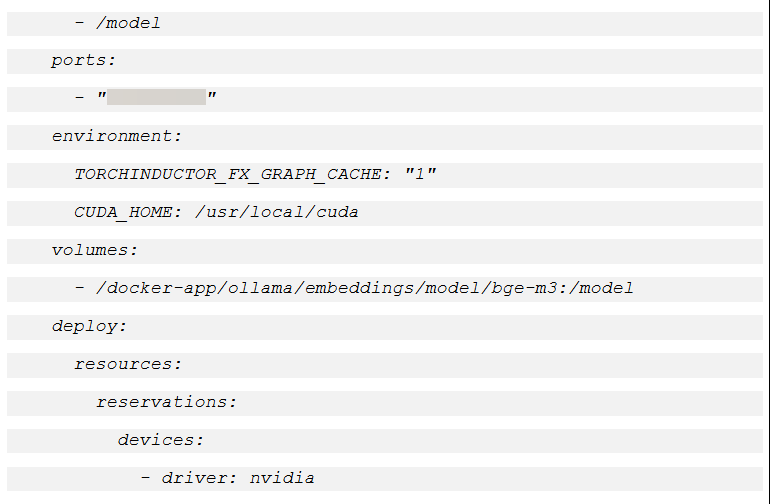

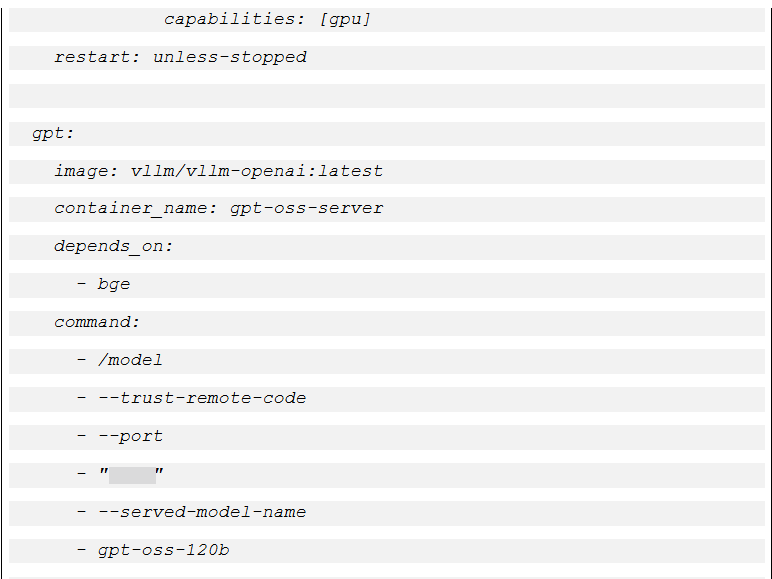

Dedicated Dockerfiles were created, and a Docker Compose configuration was used to run both services.
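Starting both services from the Compose configuration could be done along these lines. This is a sketch under assumptions: the compose file path and the `COMPOSE_FILE_PATH` and `start_services` names are hypothetical, since the actual file and service names are not given in this report.

```shell
#!/usr/bin/env bash
# Sketch: build and start the services defined in a Docker Compose file.
# COMPOSE_FILE_PATH and start_services are hypothetical names for illustration.
COMPOSE_FILE_PATH="${COMPOSE_FILE_PATH:-docker-compose.yml}"

start_services() {
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker not found; install Docker first" >&2
    return 1
  fi
  # Build the images from their Dockerfiles, start the containers in the background,
  # then list the containers so their state can be checked.
  docker compose -f "$COMPOSE_FILE_PATH" up -d --build &&
    docker compose -f "$COMPOSE_FILE_PATH" ps
}
```

Running `start_services` from the directory that holds the Compose file rebuilds any changed images before starting the containers, so edits to either Dockerfile are picked up on the next start.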